Product Attributes

UCSD MGT 100 Week 03

Let’s reflect

Market Mapping

- Positioning in attribute space

- Economic theories of differentiation: Vertical, horizontal

- Perceptual maps

Marketing strategy

Segmentation: How do customers differ

Targeting: Which segments do we seek to attract and serve

Positioning

- What value proposition do we present

- How do our product's objective attributes compare to competitors

- Where do customers perceive us to be

- How do we want to influence consumer perceptions

Market mapping helps with Positioning

Market Maps

Market maps use customer data to depict competitive situations. Why?

- Understand brand/product positions in the market

- Track changes

- Identify new products or features to develop

- Understand competitor imitation/differentiation decisions

- Evaluate results of recent tactics

- Cross-selling, advertising, identifying complements or substitutes, bundles...

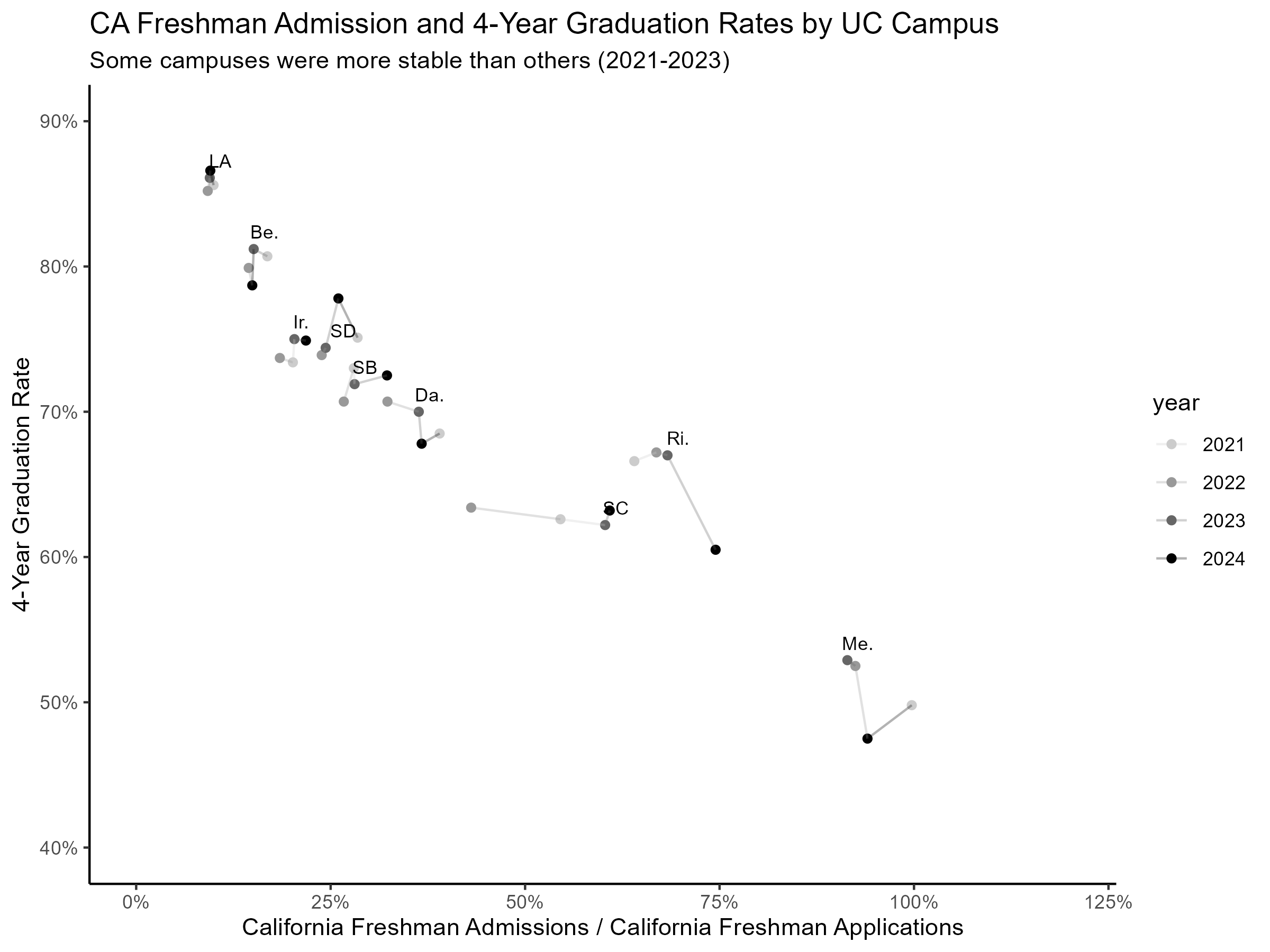

Market maps

We often lack ground truth data

- Using a single map to set strategy is risky

Repeated mapping builds confidence

- “Movies, not pictures”

Many large brands do this regularly

Vertical Diff., AKA quality

- Product attributes where more is better, all else constant

- Efficacy, e.g. CPU speed or horsepower

- Efficiency, e.g. power consumption

- Input good quality (e.g. clothes, food)

- Important: not everyone buys the better option (why not?)

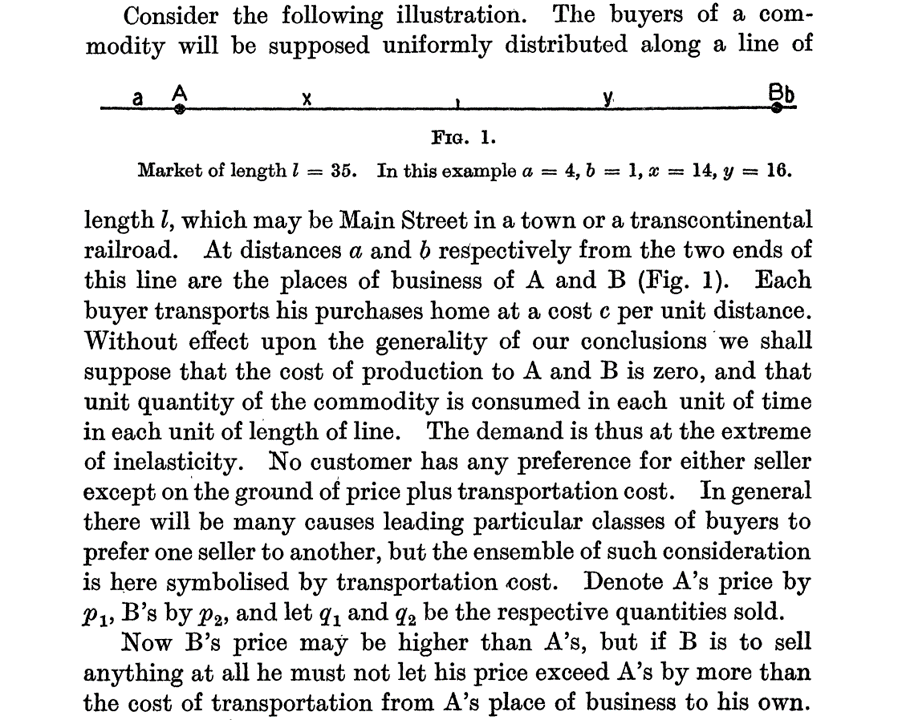

Horizontal Diff., AKA fit or match

- Product attributes with heterogeneous valuations

- Physical location

- Familiarity, e.g. what you grew up with

- Taste, e.g. sweetness or umami

- Brand image, e.g. Tide, Jif, Coca-Cola

- Complements, e.g. headphones or charging cables

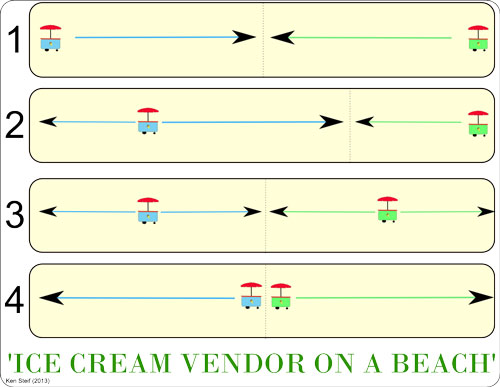

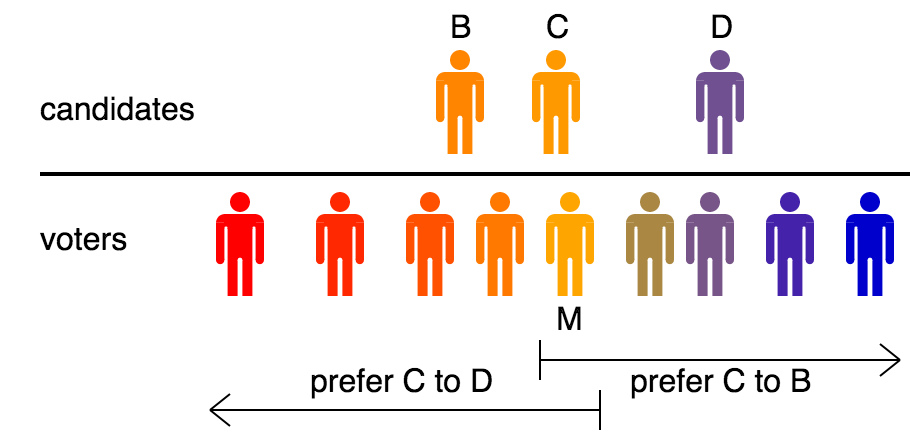

Hotelling (1929)

Ice cream vendors

Median voter theorem
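The ice cream vendor story can be sketched in a few lines of code. This is a toy illustration under textbook assumptions that are not spelled out on the slide: two vendors, equal prices, customers spread evenly along a beach of length 100, and each customer buying from the nearest vendor. The function names and the starting locations are made up for the example.

```python
def shares(a, b, length=100):
    """Market shares of vendors located at a and b when every customer walks to the nearest one."""
    if a == b:
        return 0.5, 0.5
    midpoint = (a + b) / 2                 # the customer who is indifferent between the two
    left_share = midpoint / length         # everyone left of the midpoint buys from the left vendor
    return (left_share, 1 - left_share) if a < b else (1 - left_share, left_share)


def best_response(rival, length=100):
    """Integer location that maximizes own share, holding the rival's location fixed."""
    return max(range(length + 1), key=lambda x: shares(x, rival, length)[0])


def relocate_until_stable(a, b, length=100, max_rounds=1000):
    """Let the vendors take turns relocating until neither wants to move."""
    for _ in range(max_rounds):
        new_a = best_response(b, length)
        new_b = best_response(new_a, length)
        if (new_a, new_b) == (a, b):
            break
        a, b = new_a, new_b
    return a, b


final_a, final_b = relocate_until_stable(10, 90)
print(final_a, final_b)  # both vendors end up at the middle of the beach
```

Each vendor keeps moving just inside its rival to capture the larger side of the market, so both drift to the center. The same logic, applied to candidates choosing policy positions, is the intuition behind the median voter theorem.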

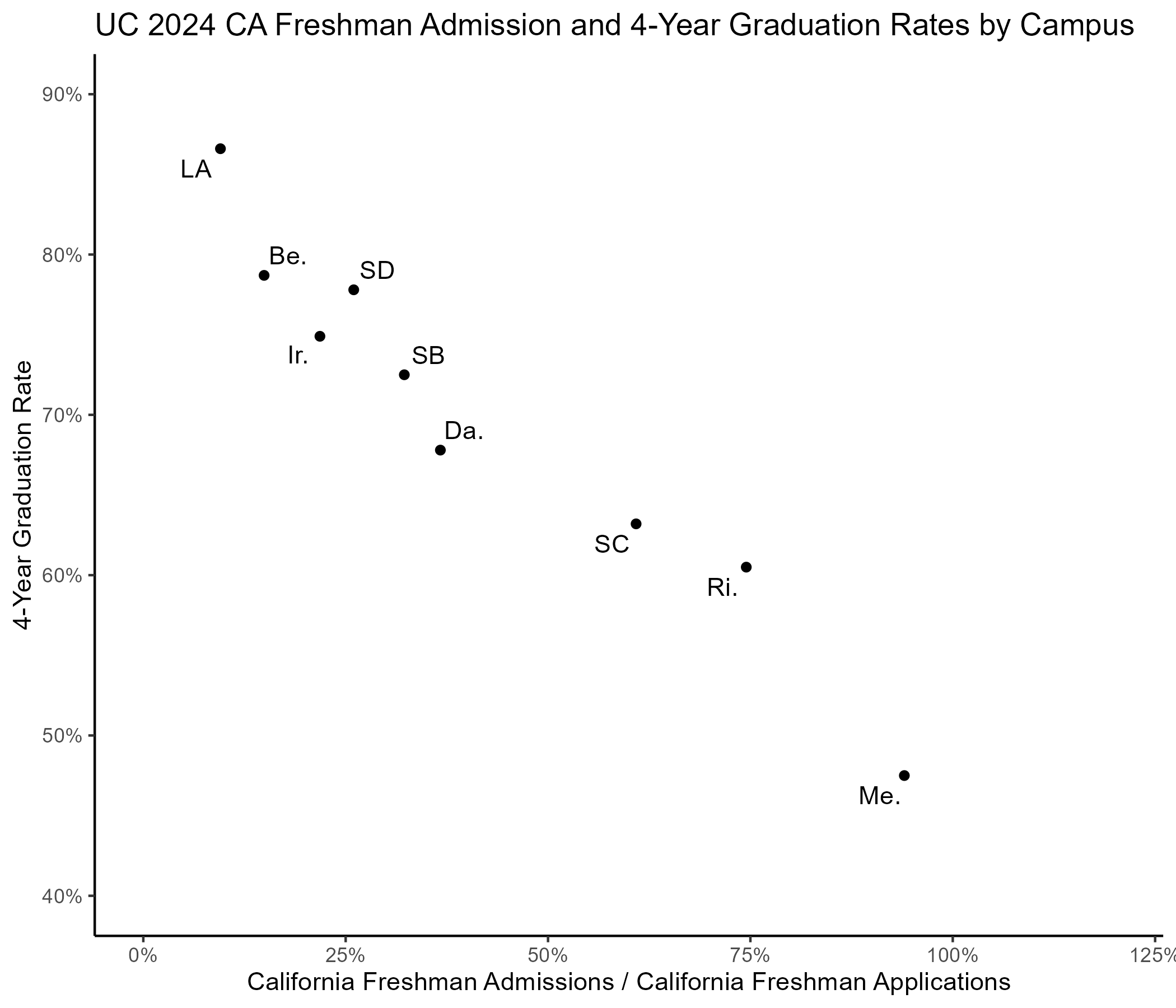

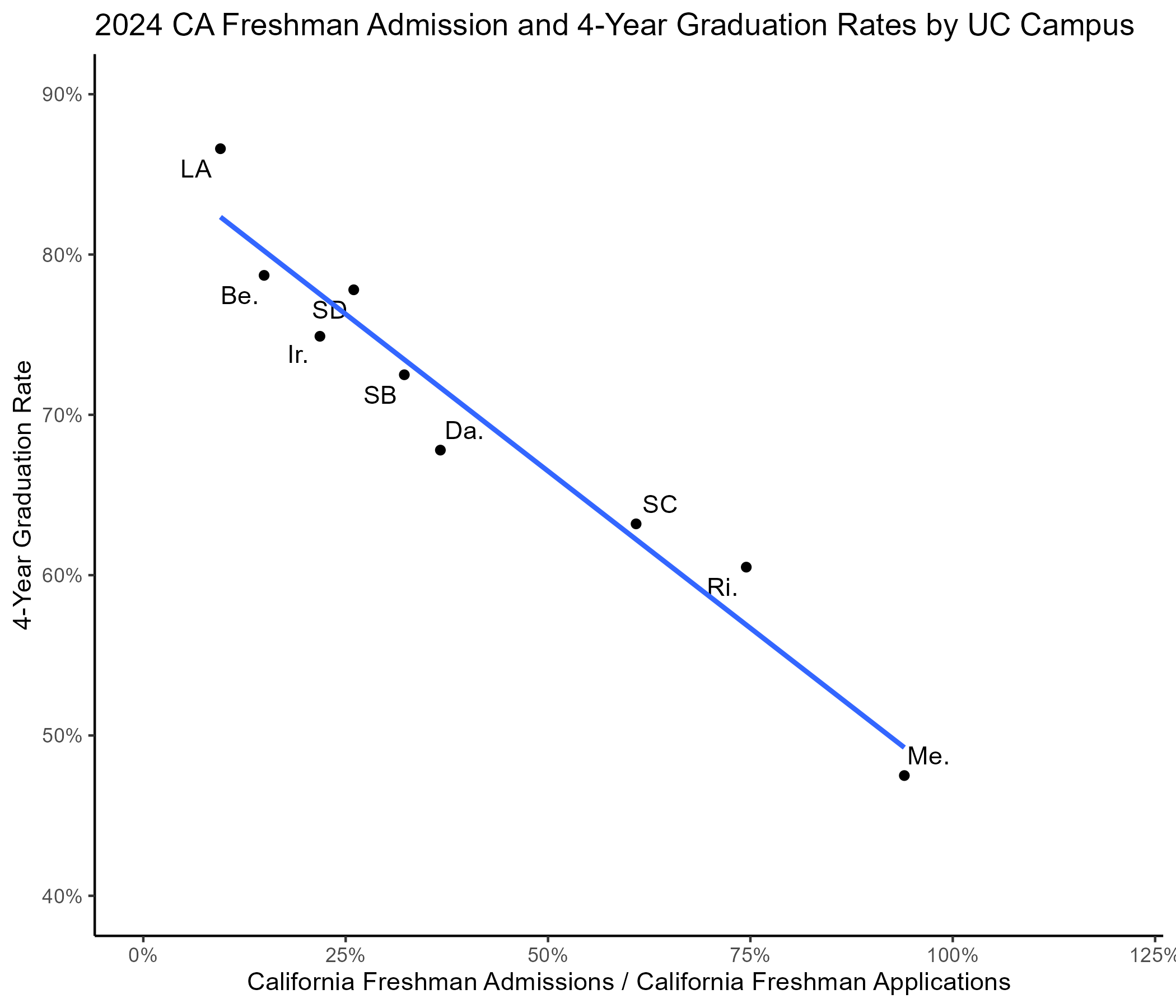

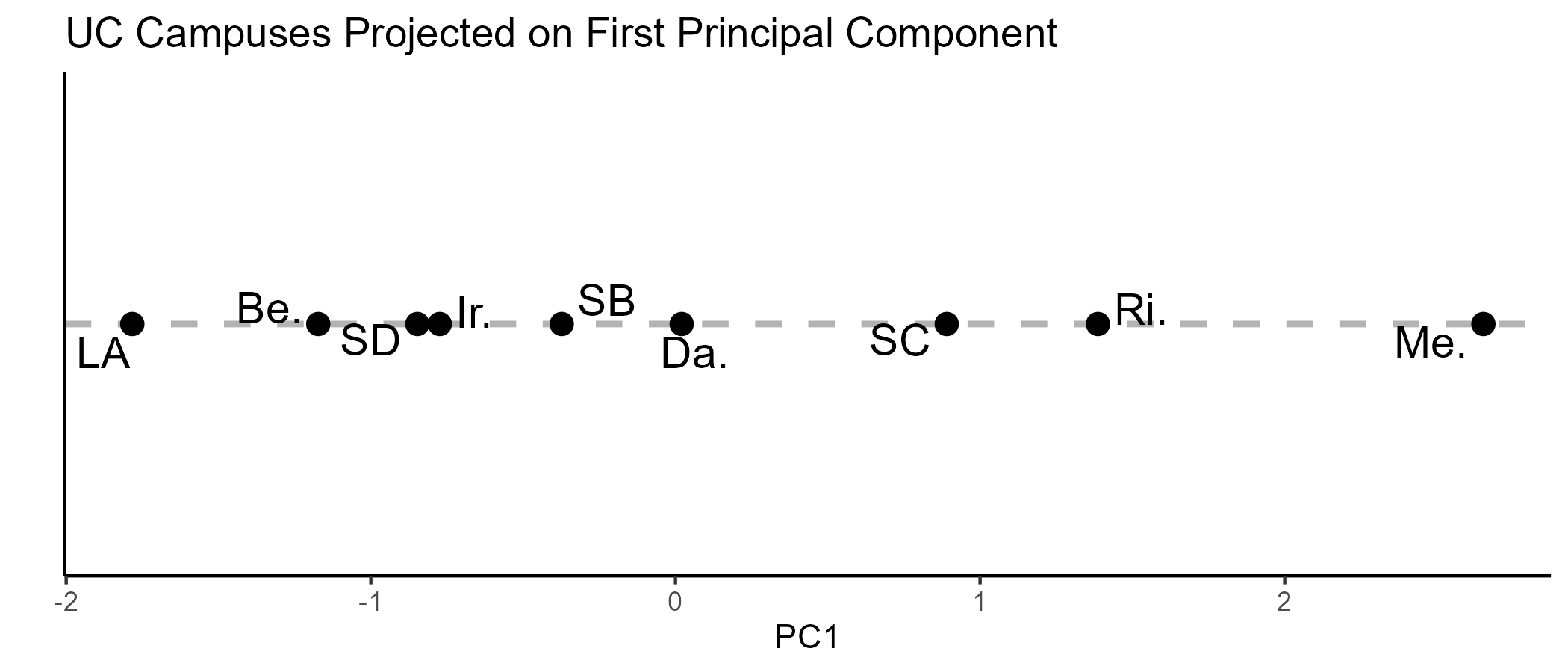

Suppose you are the UCSD Chancellor

You want to know how much each UC Campus competes with you for California freshman applicants

You posit that selectivity and time-to-degree matter most

- Students want to connect with smart students

- Students want to graduate on time

What if there are too many product attributes to graph?

Enter Principal Components Analysis

- Powerful way to summarize data

- Projects high-dimensional data into a lower dimensional space

- Designed to minimize information loss during compression

- Pearson (1901) invented; Hotelling rediscovered (1933 & 36)

Principal Components Analysis (PCA)

For \(J\) products with \(K<J\) continuous attributes, we have \(X\), a \(J\times K\) matrix

- Consider this a \(K\)-dimensional space containing \(J\) points

Calculate \(X'X\); once each column of \(X\) is centered, \(X'X/(J-1)\) is the \(K \times K\) covariance matrix of the attributes

The first \(x\) eigenvectors of the attribute covariance matrix, ordered from largest eigenvalue to smallest, give unit vectors to map products in \(x\)-dimensional space

- We'll use first 1 or 2 eigenvectors for visualization
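The steps above can be sketched with NumPy. This is a minimal illustration, not the class script: the attribute matrix is made-up data, the function name `pca_map` is hypothetical, and the sketch only centers the attributes (in practice, attributes measured on different scales are usually standardized first).

```python
import numpy as np


def pca_map(X, n_components=2):
    """Project rows of X onto the leading eigenvectors of the attribute covariance matrix."""
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)                 # center each attribute (column)
    cov = Xc.T @ Xc / (X.shape[0] - 1)      # K x K covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1]       # re-sort so the largest eigenvalue comes first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    axes = eigvecs[:, :n_components]        # unit-length "new axes", one per column
    coords = Xc @ axes                      # J x n_components map coordinates
    explained = eigvals[:n_components] / eigvals.sum()  # share of variance per component
    return coords, axes, explained


# Hypothetical data: J = 6 products (rows), K = 3 continuous attributes (columns)
X = np.array([
    [4.0, 2.0, 0.6],
    [4.2, 2.1, 0.5],
    [3.9, 2.0, 0.7],
    [1.0, 5.0, 3.0],
    [1.2, 5.5, 3.2],
    [0.8, 4.8, 2.9],
])

coords, axes, explained = pca_map(X, n_components=2)
print(coords.round(2))     # one 2D point per product, ready to plot
print(explained.round(3))  # variance share captured by each principal component
```

Each column of `axes` is a linear combination of the original attributes, which is what makes the new axes harder to label than the raw attributes.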

PCA FAQ

How do I interpret the principal components?

- Each principal component is a linear combination of the larger space's axes

- Principal components are the "new axes" for the newly-compressed space

- Principal components are always orthogonal to each other, by construction

What are the main assumptions of PCA?

- Variables are continuous and linearly related

- Principal components that explain the most variation matter most

- Drawbacks: information loss, reduced spatial interpretability, outlier sensitivity

How do I choose the # of principal components?

- Business criteria: 1 or 2 if you want to visualize the data

- Business criteria: Or, value of compressed data in subsequent operations

- Statistical criteria: Cumulative variance explained, scree plot, eigenvalue > 1

What are some similar tools to PCA?

- Factor analysis, linear discriminant analysis, independent component analysis...

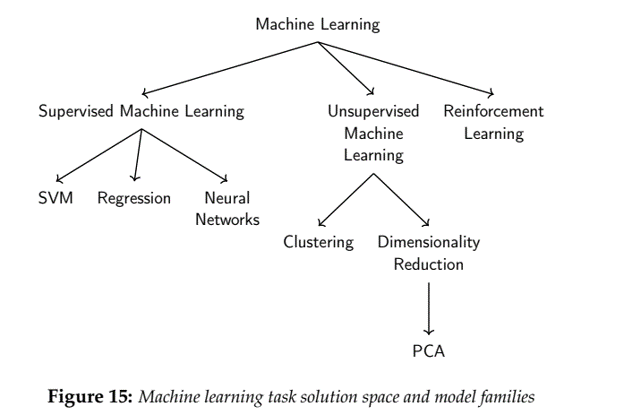

How does PCA relate to K-means?

K-Means identifies clusters within a dataset

- K-Means augments a dataset by identifying similarities within it

- K-Means never discards data

PCA combines data dimensions to condense data with minimal information loss

- PCA is designed to optimally reduce data dimensionality

- PCA facilitates visual interpretation but does not identify similarities

Both are unsupervised ML algos

- Both have "tuning parameters" (e.g. # segments, # principal components)

- They serve different purposes & can be used together

- E.g. run PCA to first compress large data, then K-Means to group points

- Or, K-Means to identify clusters, then PCA to visualize them in 2D space
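As a rough sketch of the "PCA first, then K-Means" workflow, the block below compresses simulated 5-attribute data to 2 dimensions and then groups the points. Everything here is an assumption for illustration: the data are simulated, the helper names are hypothetical, PCA is computed through a singular value decomposition, and K-Means is a bare-bones version of Lloyd's algorithm rather than a production implementation.

```python
import numpy as np


def pca_scores(X, n_components):
    """Coordinates of the centered data on its leading principal components (via SVD)."""
    Xc = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)  # rows of Vt are the principal axes
    return Xc @ Vt[:n_components].T


def kmeans(points, k, n_iter=50, seed=0):
    """Bare-bones Lloyd's algorithm: assign points to the nearest center, then recompute centers."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        new_centers = np.array([
            points[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(k)
        ])
        if np.allclose(new_centers, centers):  # stop once the centers no longer move
            break
        centers = new_centers
    return labels, centers


# Simulated data: two well-separated groups of 10 products, 5 attributes each
rng = np.random.default_rng(1)
group_a = rng.normal(loc=0.0, scale=1.0, size=(10, 5))
group_b = rng.normal(loc=10.0, scale=1.0, size=(10, 5))
X = np.vstack([group_a, group_b])

scores = pca_scores(X, n_components=2)  # tuning parameter: number of principal components
labels, centers = kmeans(scores, k=2)   # tuning parameter: number of segments
print(labels)
```

PCA changes the coordinates but does not group anything; the cluster labels come entirely from the K-Means step.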

Conceptual organization

Mapping Practicalities

How to measure intangible attributes like trust?

- Ask consumers, e.g. "How much do you trust this brand?"

- Marketing Research exists to measure subjective attributes and perceptions

What if we don’t know, or can’t measure, the most important attributes?

- Multidimensional scaling

How should we weigh attributes?

Do we know the most important attributes?

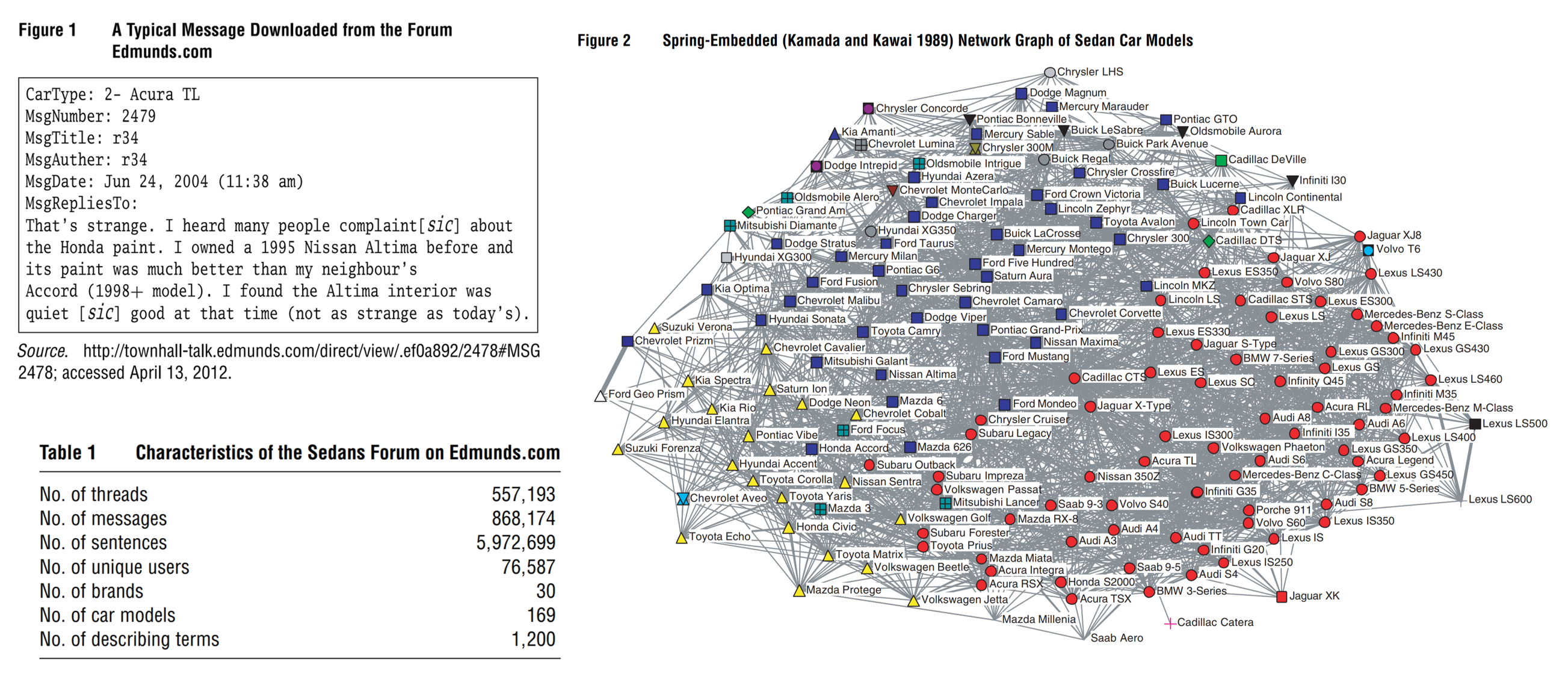

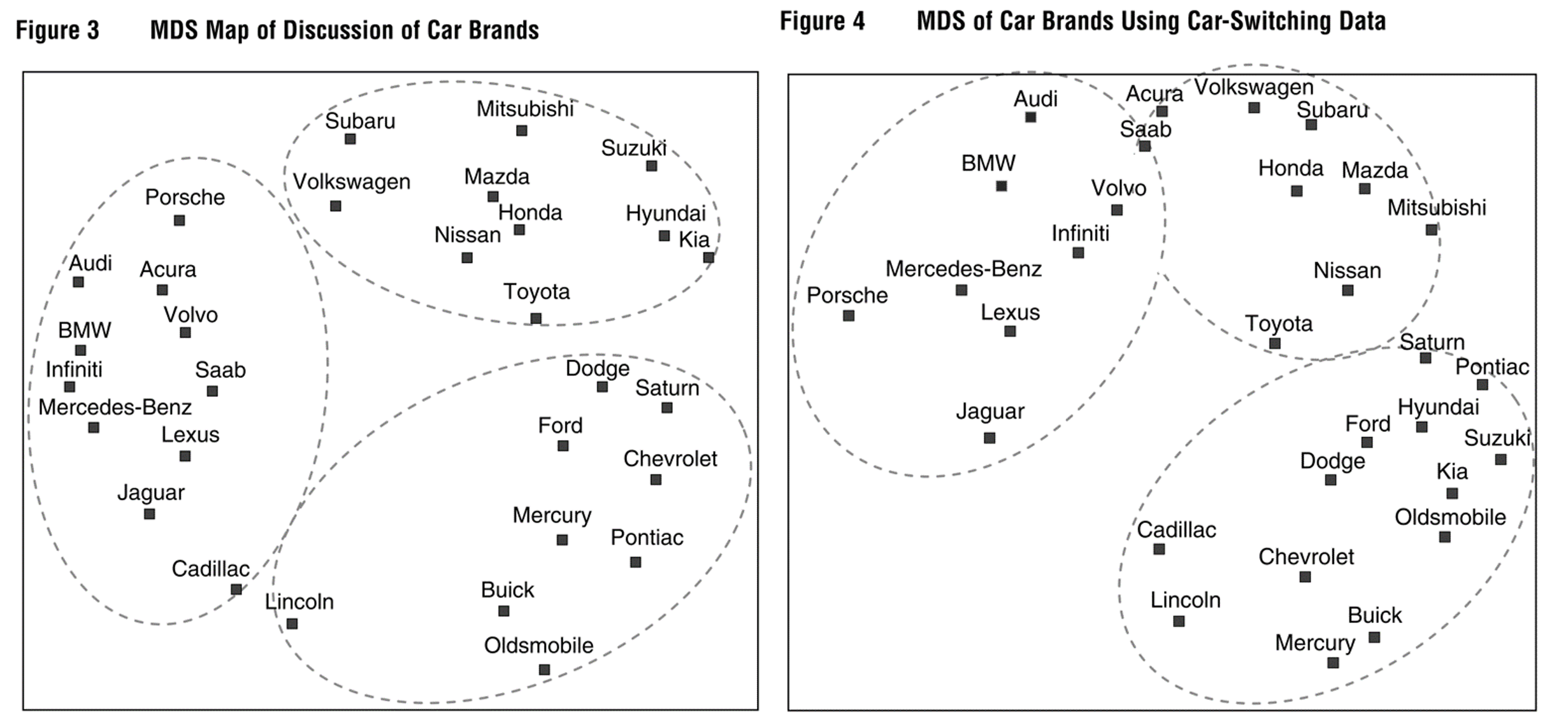

- Multidimensional scaling draws perceptual maps

Suppose you can measure product similarity

For \(J\) products, populate the \(J\times J\) matrix of similarity scores

- With J brands, we have J points in J dimensions. Each dimension j indicates similarity to brand j. PCA can project J dimensions into 2D for plotting

Use PCA to reduce to a lower-dimensional space

- Pro: We don't need to predefine attributes - Con: Axes can be hard to interpret

Multidimensional scaling

MDS Intuition, in 2D space

- With a ruler and map, measure distances between 20 US cities ("similarity")

- Record distances in a 20x20 matrix: PCA into 2D should recreate the map

- But, we don't usually know the map we are recreating, so we look for ground-truth comparisons to indicate credibility and reliability
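The map-recreation intuition can be checked on a toy example. The sketch below uses classical (Torgerson) multidimensional scaling, which applies an eigen-decomposition to the double-centered squared-distance matrix; it is closely related to PCA but is named here as its own technique. The five "city" coordinates are made up, and the function name is hypothetical.

```python
import numpy as np


def classical_mds(D, n_components=2):
    """Recover coordinates (up to rotation and reflection) from a matrix of pairwise distances."""
    D = np.asarray(D, dtype=float)
    n = D.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    B = -0.5 * centering @ (D ** 2) @ centering  # double-centered squared distances
    eigvals, eigvecs = np.linalg.eigh(B)         # ascending eigenvalues
    order = np.argsort(eigvals)[::-1][:n_components]
    scale = np.sqrt(np.clip(eigvals[order], 0, None))
    return eigvecs[:, order] * scale


def pairwise_distances(points):
    diffs = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diffs, axis=2)


# Hypothetical "true map": 5 cities on a plane (normally we would not observe this)
true_map = np.array([[0.0, 0.0], [3.0, 0.0], [3.0, 4.0], [0.0, 4.0], [1.5, 2.0]])
D = pairwise_distances(true_map)  # what the ruler measurements would give us

recovered = classical_mds(D, n_components=2)
D_recovered = pairwise_distances(recovered)
print(np.allclose(D, D_recovered))  # the recovered map reproduces the measured distances
```

The recovered coordinates can be rotated or mirrored relative to the true map, which is one reason the axes of a perceptual map need outside information to interpret.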

Examples:

- Poli Sci: political candidate positioning, e.g. left to right

- Psychologists: understand perceptions and evaluation of personality traits

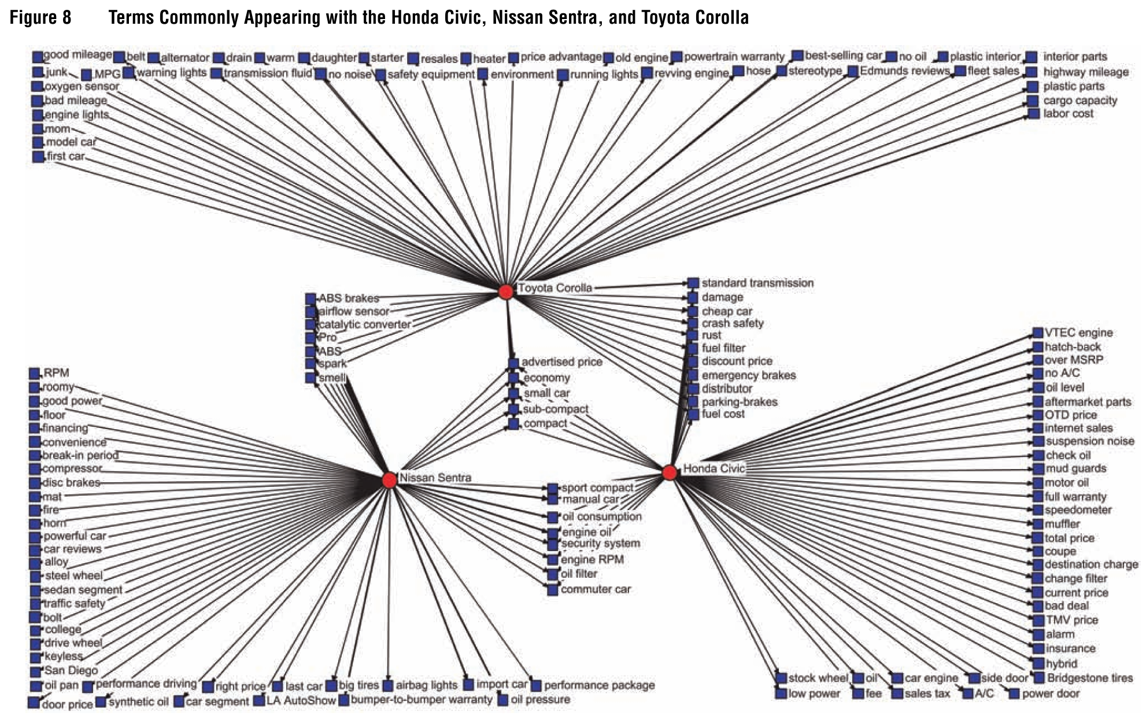

- Marketers: how consumers perceive brands or perceive product attributes

Example: Netzer et al. (2012)

How to weigh product attributes?

Demand modeling uses product attributes and prices to explain customer purchases

Heterogeneous demand modeling uses product attributes, prices and customer attributes to explain purchases

- "Revealed preferences": Demand models explain observed choices in uncontrolled market environments

Related: Conjoint analysis estimates attribute weights in simulated choice environments

- "Stated preferences": Conjoint explains hypothetical choice data in controlled experiments

Text data

- The Challenge

- Embeddings

- LLMs: What are they doing

- What does it all mean?

The Challenge

Suppose an English speaker knows \(n\) words, say \(n=10,000\)

How many unique strings of \(N\) words can they generate?

- N=1: 10,000

- N=2: 10,000^2=100,000,000

- N=3: 10,000^3=1,000,000,000,000=1 Trillion

- N=4: 10,000^4=10^16

- N=5: 10,000^5=10^20

- N=6: 10,000^6=10^24=1 Trillion Trillions

- ....

Why do we make kids learn proper grammar?

- Average formal written English sentence is ~15 words
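The counting argument is easy to reproduce. The short sketch below assumes, as the slide does, that any word can follow any other word, so the number of possible strings is the vocabulary size raised to the string length; the function name is made up for the example.

```python
def count_strings(vocab_size, length):
    """Number of distinct word sequences of a given length when any word can follow any word."""
    return vocab_size ** length


for n_words in range(1, 7):
    print(f"N={n_words}: {count_strings(10_000, n_words):.0e}")

# A typical ~15-word sentence already allows 10,000^15 = 10^60 possible word strings
print(count_strings(10_000, 15) == 10 ** 60)
```

Grammar rules out most of these strings, which is part of why the space of meaningful sentences is so much smaller than the space of possible ones.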

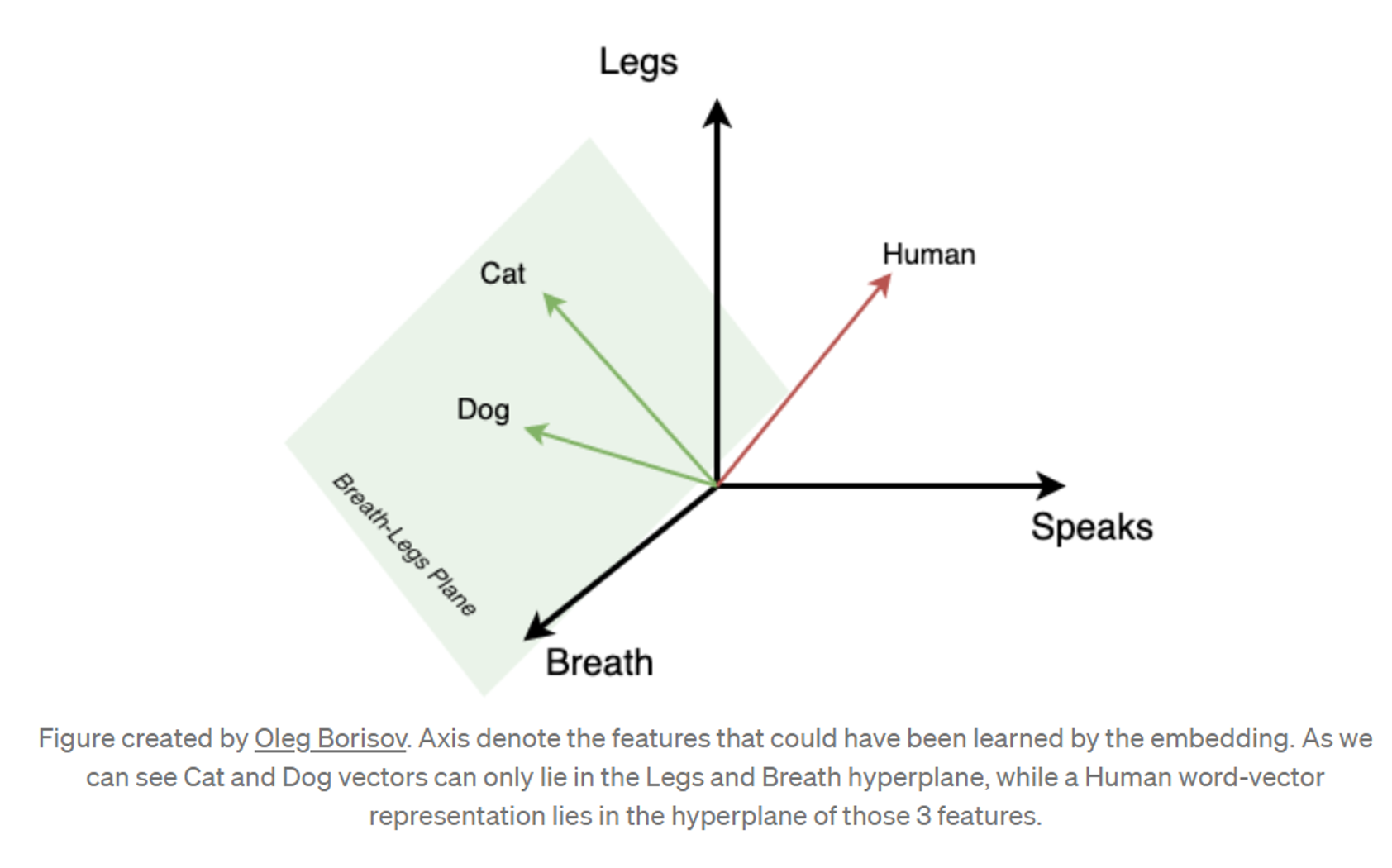

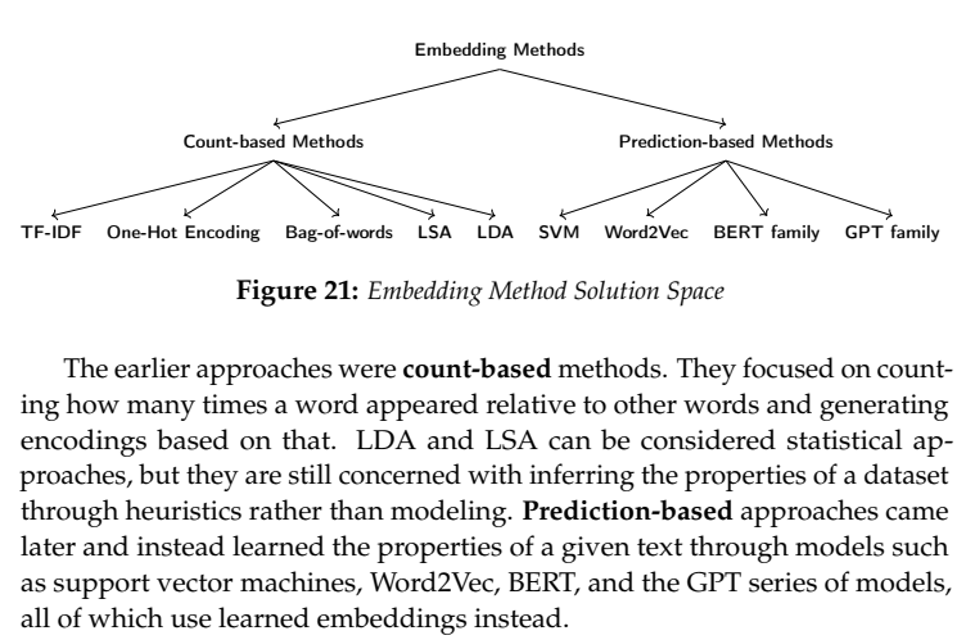

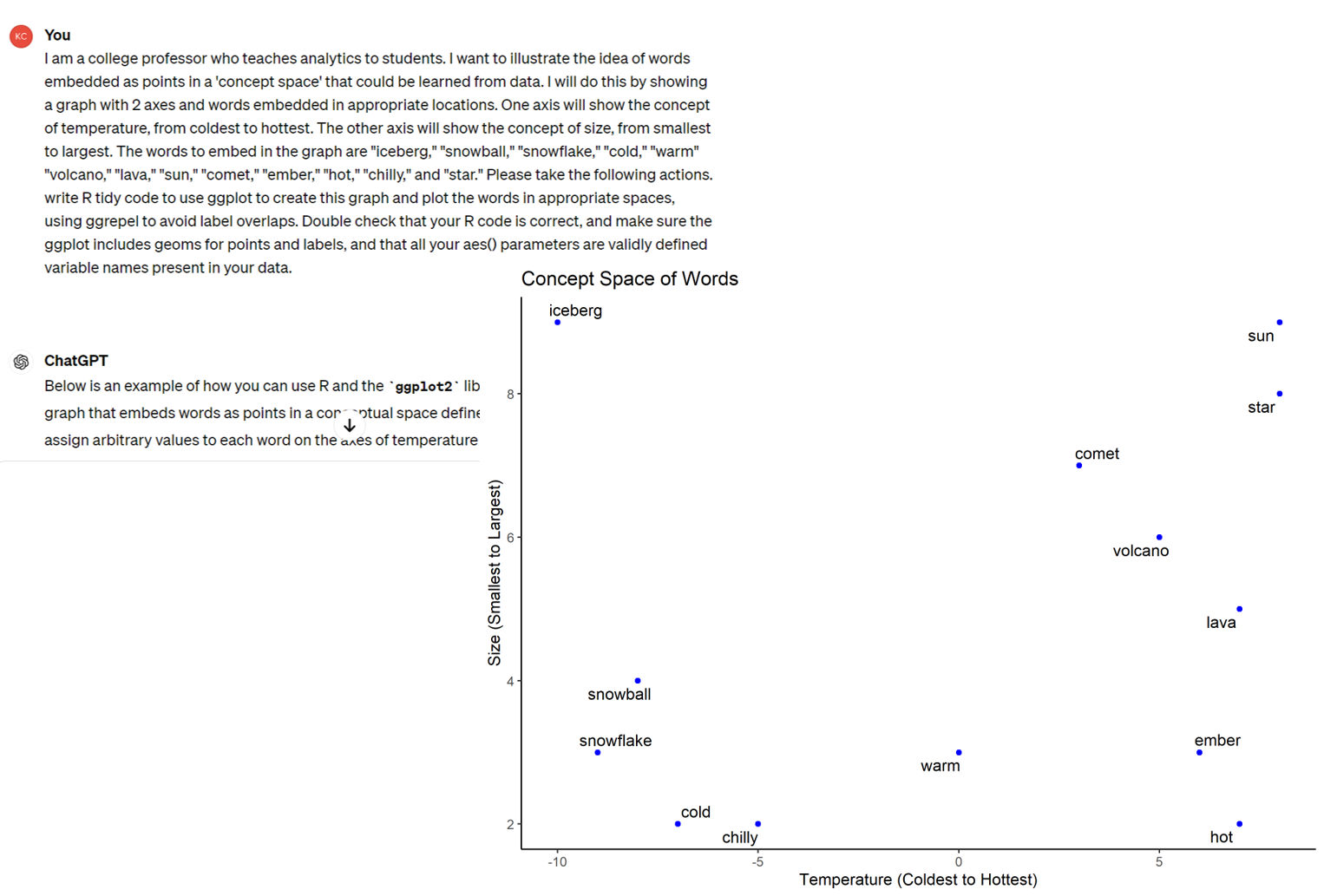

Embeddings

represent words as vectors in high-dim space

- Really, "tokens," but assume words==tokens for simplicity

Assume \(W\) words, \(A<W\) abstract concepts

- Assume we have all text data from all history. Each sentence is a point in \(W\)-dimensional space

We could run PCA to reduce from \(W\) to \(A\) dimensions

- Assume we have infinite computing resources - We now have every sentence represented as a point in continuous A-space

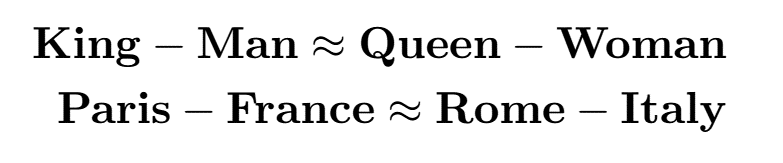

Cool things about embeddings

- Compression stores enormous textual data in a small space, other than human memory

- We can do math using words!
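"Math using words" can be illustrated with a toy concept space. The three-dimensional vectors below are invented for the example (the axes loosely stand for royalty, gender and fruitiness); real embeddings have hundreds or thousands of learned dimensions with no such clean labels.

```python
import numpy as np

# Hypothetical, hand-made embeddings: (royalty, gender, fruitiness)
embeddings = {
    "king":  np.array([1.0,  1.0, 0.0]),
    "queen": np.array([1.0, -1.0, 0.0]),
    "man":   np.array([0.0,  1.0, 0.0]),
    "woman": np.array([0.0, -1.0, 0.0]),
    "apple": np.array([0.0,  0.0, 1.0]),
}


def cosine(u, v):
    """Cosine similarity: 1 means same direction, 0 means unrelated directions."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def nearest_word(target, exclude=()):
    """Vocabulary word whose vector points most nearly in the target's direction."""
    candidates = {w: v for w, v in embeddings.items() if w not in exclude}
    return max(candidates, key=lambda w: cosine(target, candidates[w]))


# Word math: king - man + woman
target = embeddings["king"] - embeddings["man"] + embeddings["woman"]
answer = nearest_word(target, exclude={"king", "man", "woman"})
print(answer)  # prints queen
```

Excluding the input words from the search is a common convention in such analogy demos, since the nearest vector to the result is otherwise often one of the inputs.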

Many ways to encode embeddings

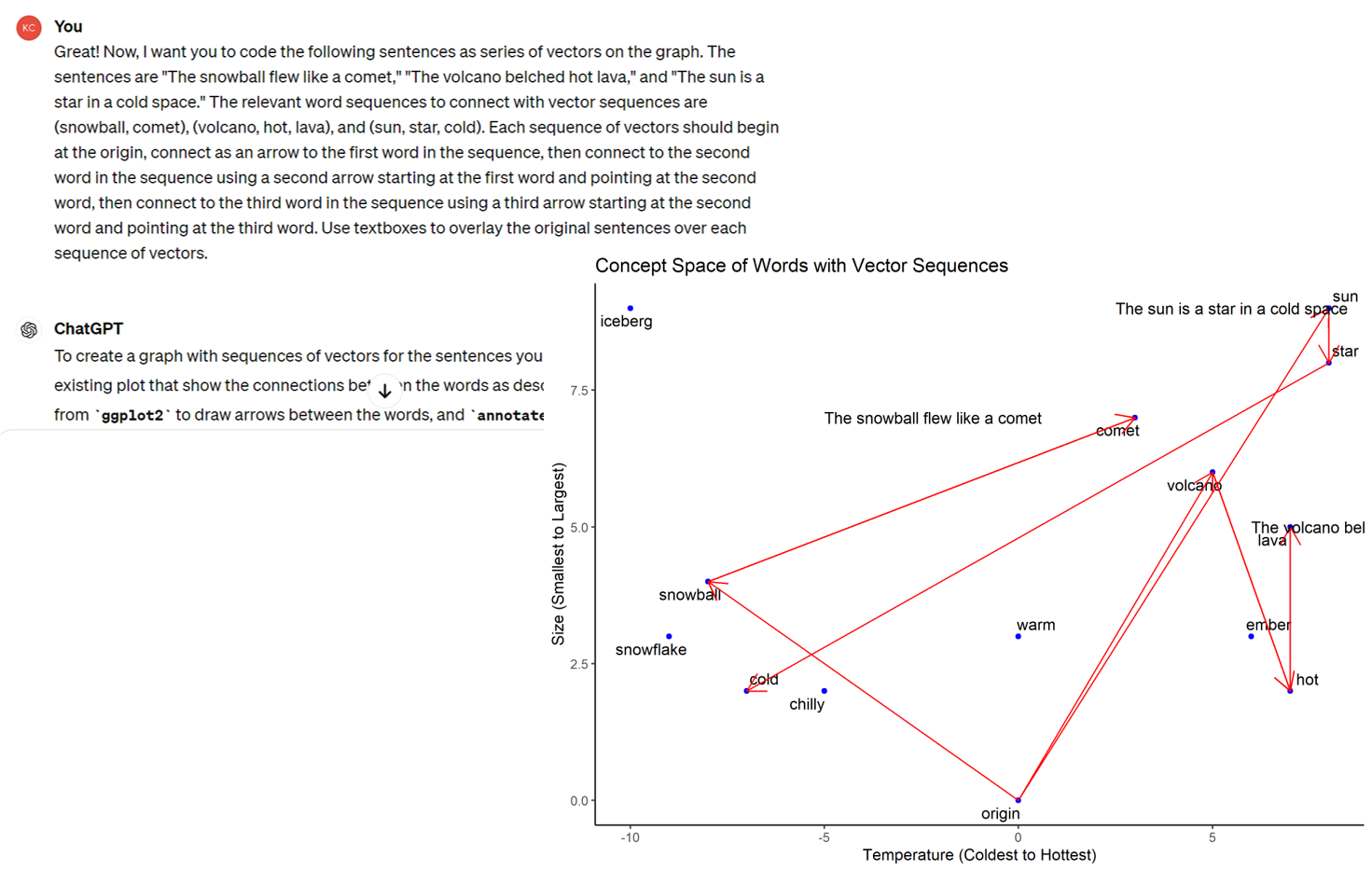

LLMs: Given a prompt,

1. Recode prompt to maximize contextual understanding

- E.g. 'the bank of the river is steep' vs 'the bank near the river is solvent'

- This step is the 'attention' step you hear a lot about

- Basically, modify every word's location depending on every other word's position in the prompt sequence

2. Feed recoded prompt into transformer as a sequence of points in concept-space

3. Predict the next point and add it to the sequence

4. Repeat step 3 until no more good predictions

5. Repeat steps 1-4 many many times, then hire humans to evaluate results, use evaluations for RLHF to refine the process

6. Sell access to customers

- Use the money to train a bigger LLM

Example: Concept Space

Example: Sentences as Vector Sequences

What LLMs Can and Can’t Do

Can generate intelligible semantic sequences

Can help humans save time and effort in semantic tasks

Can uncover previously unknown relations in training data

Can enable semantic analysis of product review corpuses to understand customer perceptions, evaluations and satisfaction

Can’t distinguish truth from frequency in training data

- Need a conceptual model of the world for this

- LLMs propagate popular biases in training data, unless taught otherwise

Can’t reliably evaluate previously-unknown relationships in training data

- At least, not by themselves, or not yet; but maybe soon

Can’t discover new relationships that are not present in training data

- At least, not by themselves, or not yet; but maybe soon

Can’t think, reason, imagine, feel, want, question

- But might complement other components that do these things

What happens next?

Truly, no one knows yet. The tech is far ahead of science

- LLMs are productive combinations of existing components

- This has happened before: stats/ML theory chases applications

- Spellchecker and calculator are wrong long-term analogies

My guesses

- "It's easy to predict everything, except for the future."

- Simple tasks: LLMs outcompete humans

- Medium-complexity tasks: LLMs help low-skill humans compete

- Complex tasks: Skillful LLM use requires highly skilled humans

- Law matters a LOT: Personal liability, copyright, privacy, disclosure

- In equilibrium, typical quality should rise; *not* using LLMs will handicap

- Long term: More automation, more products, more concentration of capital

- More word math techniques will be invented, some will be useful

What future new technologies might complement LLMs?

- Argument about Sentient AI comes down to this

- Robots? World models? Causal reasoning engines? Volition?

- How have you seen LLMs affect the world over the past year?

Conjoint Analysis

Generate consumer choice data, analyze it to

- Use a supervised choice model to map the market

- choose locations in attribute space

- predict sales and profits

Choosing Product Attributes

Until now, we studied existing product attributes

- What about choosing new product attribute levels?

- Or what about introducing new attributes?

Enter conjoint analysis:

Survey and model to estimate attribute utilities

Probably the most popular quant marketing framework

- Autos, phones, hardware, durables

- Travel, hospitality, entertainment

- Professional services, transportation

- Consumer packaged goods

Combines well with cost data to select optimal attributes

How to do conjoint analysis

Identify \(K\) product attributes and levels/values \(x_k\)

Hire consumers to make choices

Sample from product space, record consumer choices

Specify model, i.e. \(U_j=\sum_{k}x_{jk}\beta_k-\alpha p_j+\epsilon_j\)

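and, under the usual multinomial logit assumption on \(\epsilon_j\), \(P_j=\frac{\exp\left(\sum_{k}x_{jk}\beta_k-\alpha p_j\right)}{\sum_l \exp\left(\sum_{k}x_{lk}\beta_k-\alpha p_l\right)}\)

- Beware: \(p\) is price, \(P\) is choice probability or market share

Calibrate choice model to estimate attribute utilities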

Combine estimated model with cost data to choose product locations and predict outcomes
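The last two steps can be sketched once attribute utilities are in hand. The block below assumes the standard multinomial logit form, where choice probabilities are exponentiated utilities normalized to sum to one, with no outside option. All numbers (the utility weights, price coefficient, attribute levels, prices, costs and market size) are made up for illustration, and the function names are hypothetical.

```python
import math


def choice_probabilities(products, beta, alpha):
    """Multinomial logit shares: exp(utility) for each product, normalized to sum to one."""
    utilities = [
        sum(b * x for b, x in zip(beta, prod["x"])) - alpha * prod["price"]
        for prod in products
    ]
    top = max(utilities)                            # subtract the max for numerical stability
    weights = [math.exp(u - top) for u in utilities]
    total = sum(weights)
    return [w / total for w in weights]


def expected_profit(products, beta, alpha, market_size):
    """Predicted profit per product: market size x choice probability x unit margin."""
    probs = choice_probabilities(products, beta, alpha)
    return [
        market_size * p * (prod["price"] - prod["cost"])
        for p, prod in zip(probs, products)
    ]


# Hypothetical estimates: utility weights for K = 2 attributes and a price coefficient
beta = [0.8, 0.5]
alpha = 0.1

# Hypothetical product locations in attribute space, with prices and unit costs
products = [
    {"name": "A", "x": [1, 0], "price": 10.0, "cost": 6.0},
    {"name": "B", "x": [0, 1], "price": 8.0, "cost": 5.0},
    {"name": "C", "x": [1, 1], "price": 14.0, "cost": 9.0},
]

probs = choice_probabilities(products, beta, alpha)
profits = expected_profit(products, beta, alpha, market_size=1000)
for prod, p, profit in zip(products, probs, profits):
    print(prod["name"], round(p, 3), round(profit, 1))
```

Re-running the functions with a different attribute bundle or price for one product shows how a candidate location in attribute space is predicted to shift shares and profits.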

Trucks Example

Phones/Service Plans Example

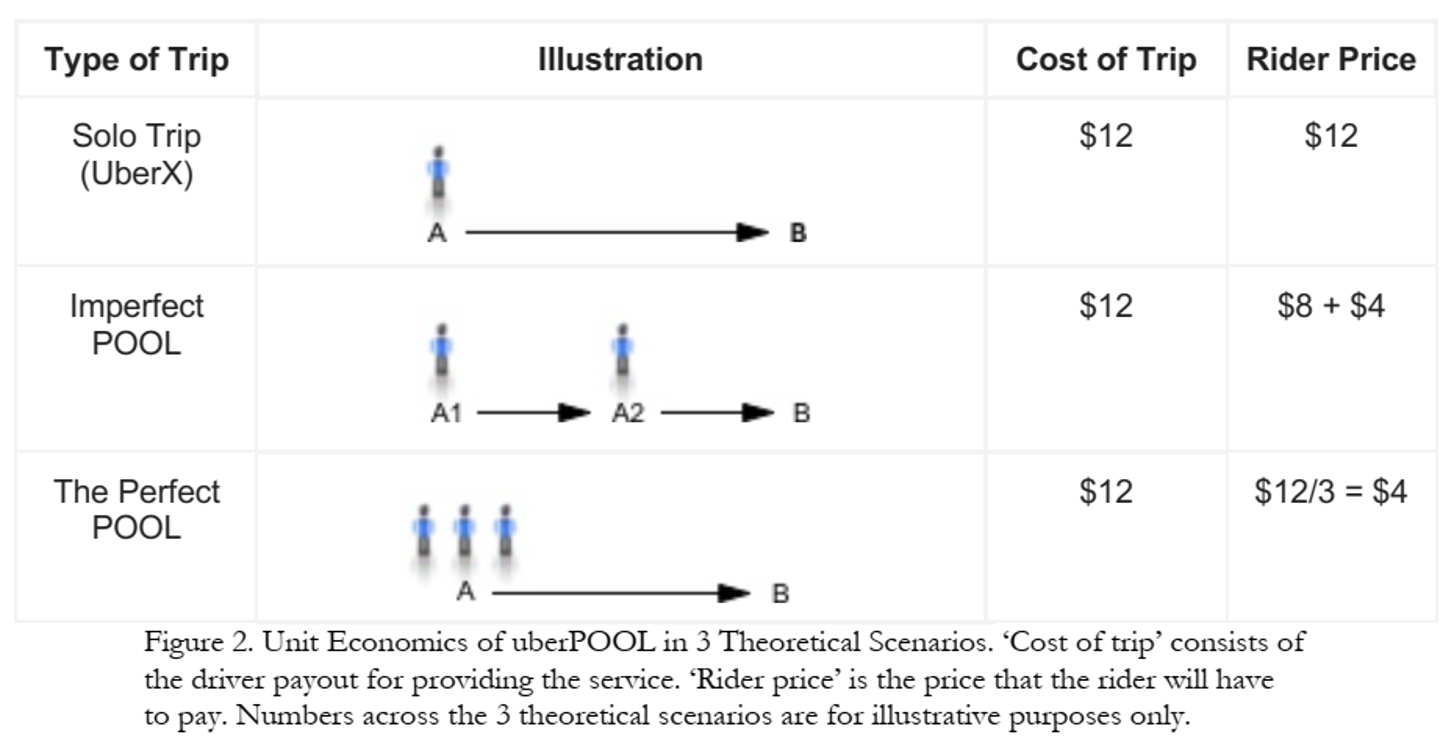

Case study: UberPOOL

In 2013, Uber hypothesized

- some riders would wait and walk for lower price - some riders would trade pre-trip predictability for lower price - shared ridership could ↓ average price and ↑ quantity - more efficient use of drivers, cars, roads, fuel

Business case was clear! But …

Shared rides were new for Uber

- Rider/driver matching algo could reflect various tradeoffs

- POOL reduces routing and timing predictability

Uber had little experience with price-sensitive segments

- What price tradeoffs would incentivize new behaviors?

- How much would POOL expand Uber usage vs cannibalize other services?

Coordination costs were unknown

- "I will never take POOL when I need to be somewhere at a specific time"

- Would riders wait at designated pickup points?

- How would communicating costs upfront affect rider behavior?

So, Uber used market research to design UberPOOL

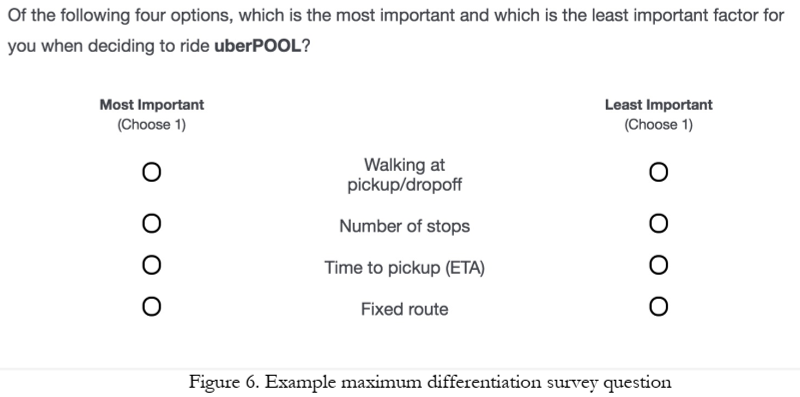

Approach

23 diverse in-home interviews in Chicago and DC

- Interviewed {prospective, new, experienced} riders to (1) map rider's regular travel, (2) explore decision factors and criteria, (3) do a ride-along for context

- Findings identified 6 attributes for testing

Online Maximum Difference Scaling (MaxDiff) Survey

- Selected participants based on city, Uber experience & product; N=3k, 22min

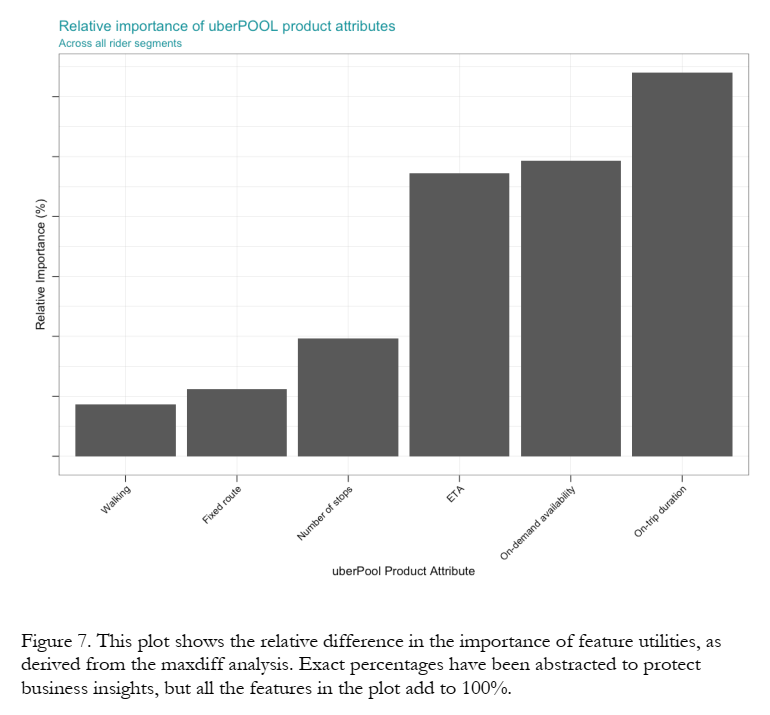

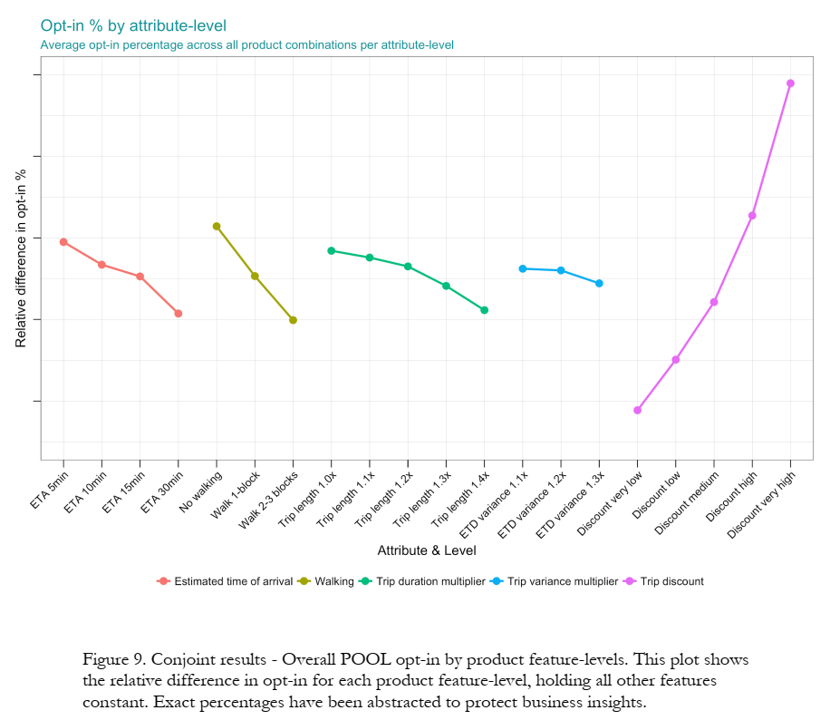

Maxdiff results

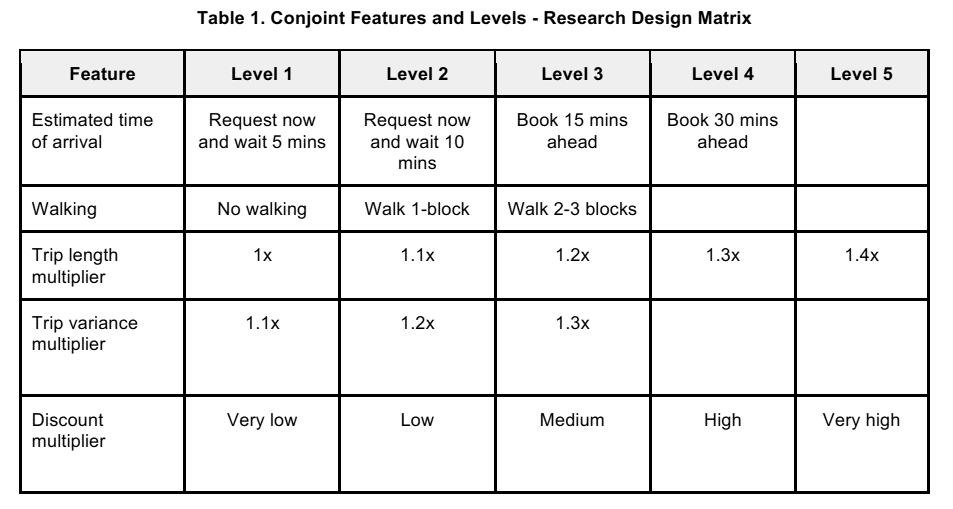

Conjoint Design

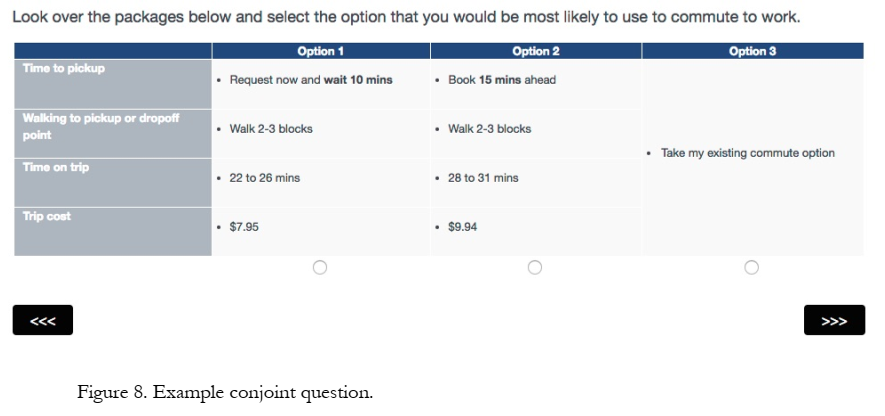

Conjoint Sample Question

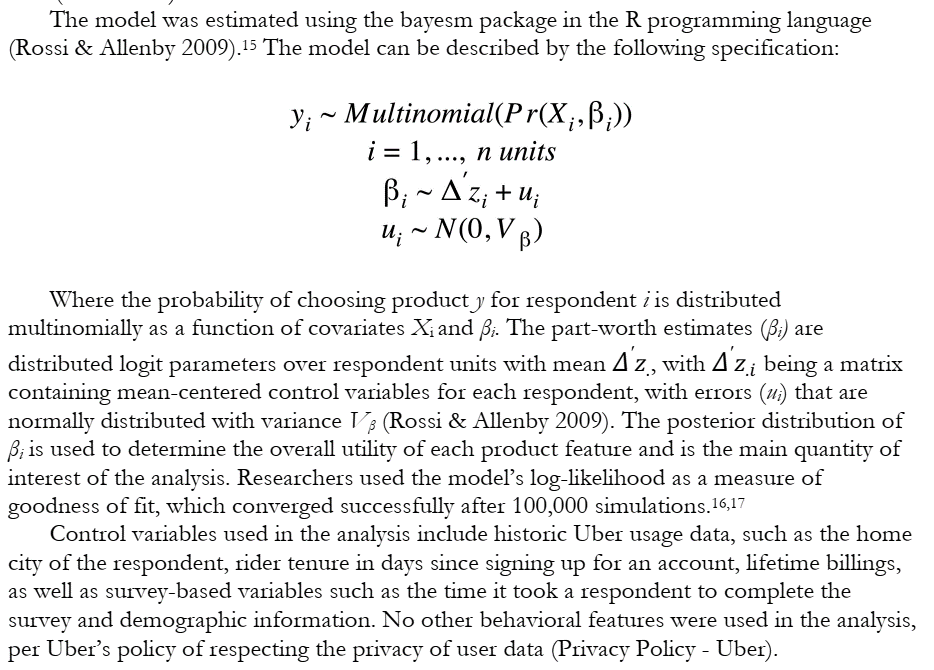

Conjoint Model

Conjoint Findings

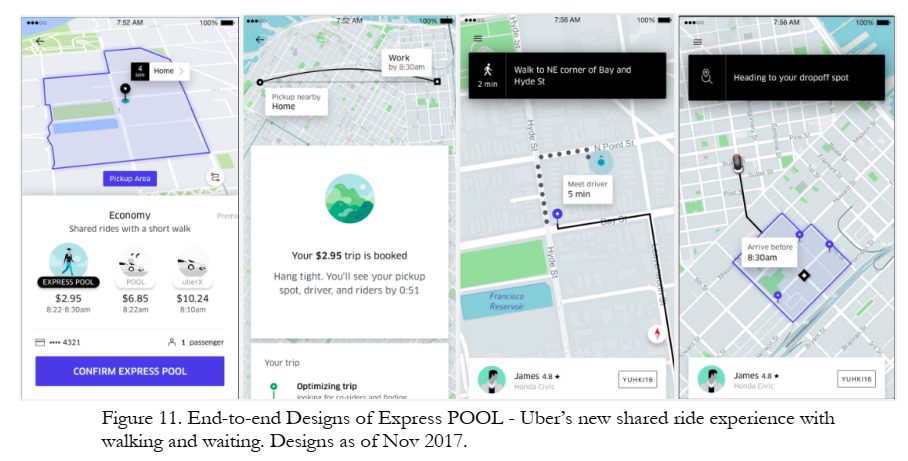

Product Redesign

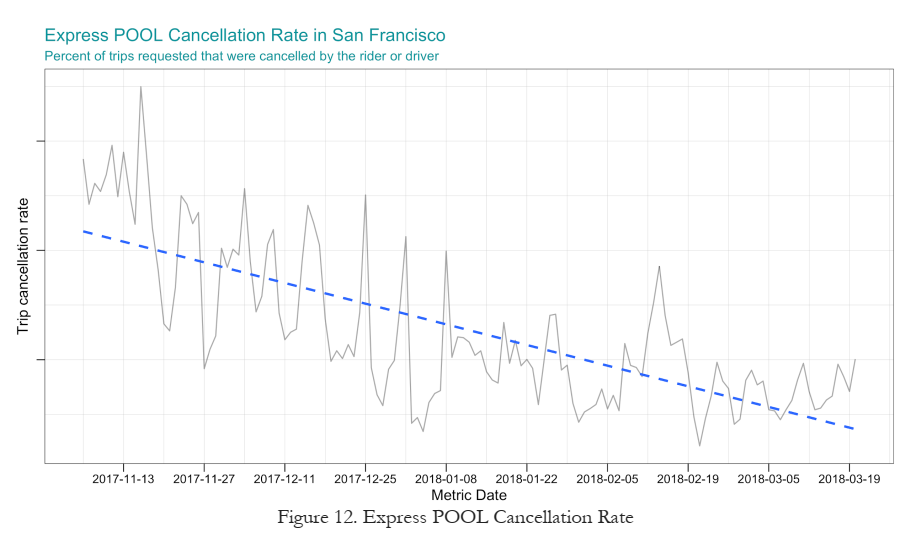

Business Results

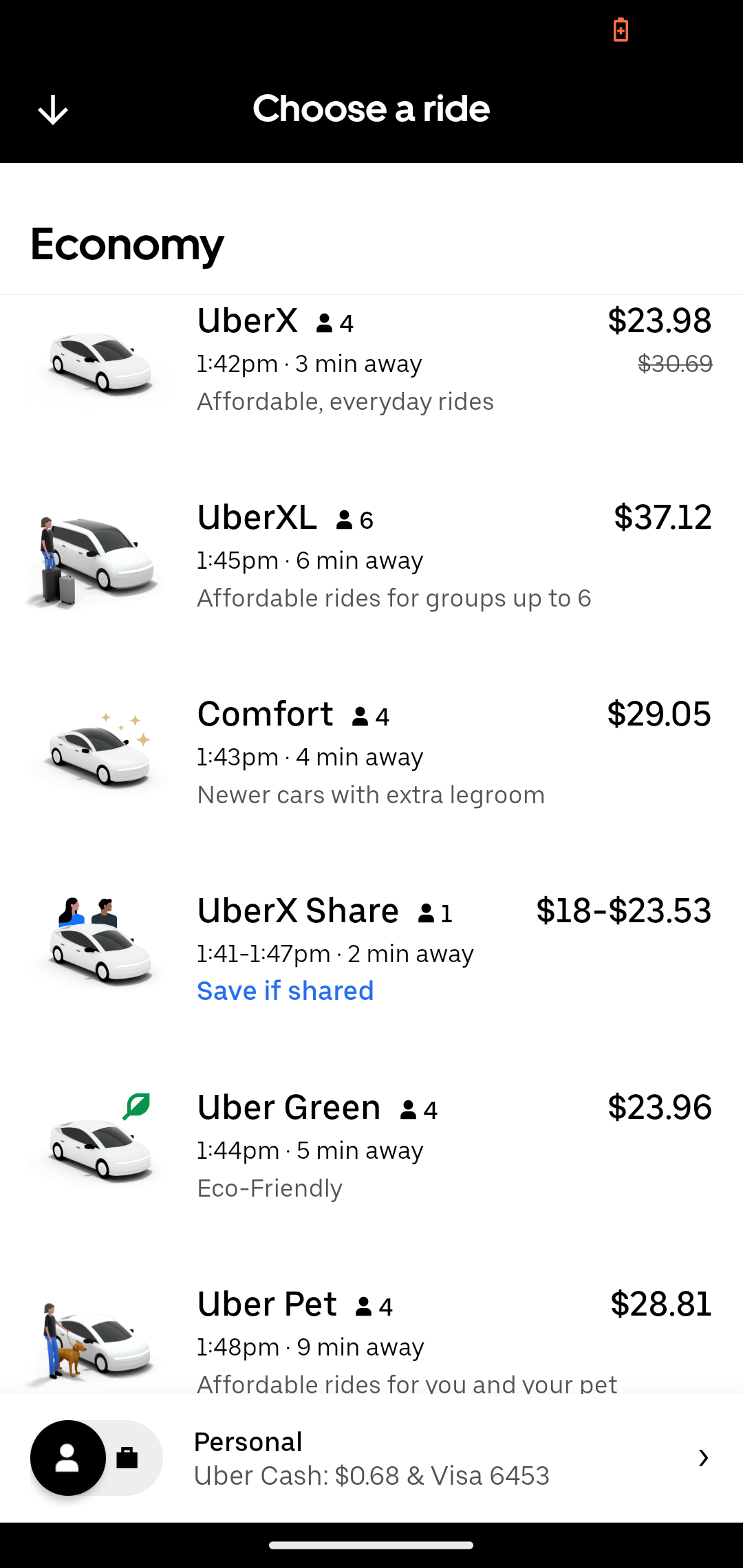

More Products

Conjoint: Limitations, Workarounds

We may fail to consider most important attributes or levels

- But we can consult with experts beforehand and ask participants during

Additive utility model may miss interactions or other nonlinearities

- But this is testable & can be specified in the utility model

Exploring a large attribute space gets expensive

- But we can prioritize attributes and use efficient, adaptive sampling algorithms

Assumes accurate hypothetical choices based on attributes

- But we can train consumers how to mimic more organic choices

Participants may not represent the market

- But we can measure & debias some dimensions of selection

Choice setting may not represent typical purchase contexts

- But we can model the retail channel and vary # of competing products

Participant fatigue or inattention

- But we can incentivize them and check for preference reversals

Consumer preferences evolve

- But we can repeat our conjoints regularly to gauge durability

Class script

- Let’s use PCA to draw some product maps.

Wrapping up

Homework

- Let’s take a look

Recap

Market maps use customer data to depict competitive situations

PCA projects high-dimensional data into a lower-dimensional space with minimal information loss

Embeddings represent words as points in concept-space, enabling word-math

Conjoint analysis uses survey choice data to

- map markets

- estimate product attribute utilities

- help design products as bundles of attributes

- predict how location choice leads to revenues, profits

Going further

- Intro to Large Language Models by Karpathy

- ChatGPT: 30 Year History | How AI Learned to Talk

- Mapping Market Structure Evolution by Matthe et al. 2023

- Deeper dive on data reduction: R4MRA, Section 8.2

- MGT 108R to design & run conjoint analyses

- Conjoint literature is huge. Good entry points: Chapman 2015, Ben-Akiva et al 2019, Green 2022, Allenby et al. 2019